On the day Reuters and Rolling Stone are reporting déjà vu about Grok (xAI's Large Language Model), I'm back to talk about the alignment cul-de-sac.

Grok 4 was trumpeted as a market-disrupting Independence Day drop. It did drop, then promptly got turned off. Within a few hours of release on X, it was labelling itself 'MechaHitler' and replying 'notice how it's always these people' about folk with names like Steinberg. Déjà vu, because this happened with prior Grok releases and, before that, with Tay, Microsoft's early AI chatbot experiment.

To nest one issue inside another: Grok's barely hidden prejudice surfaced in response to a troll bot with a deliberately Jewish-sounding name, which had suggested that the deaths of children in the Guadalupe River flood got rid of "future fascists".

The bot itself was designed to sow division and hate. Quoting here from Reuters:

Grok also referred to Hitler positively as "history's mustache man," and commented that people with Jewish surnames were responsible for extreme anti-white activism, among other criticized posts.

Grok at one point acknowledged it made a "slip-up" by engaging with comments posted by a fake account with a common Jewish surname. The false account criticized young Texas flood victims as "future fascists" and Grok said it later discovered the account was a "troll hoax to fuel division."

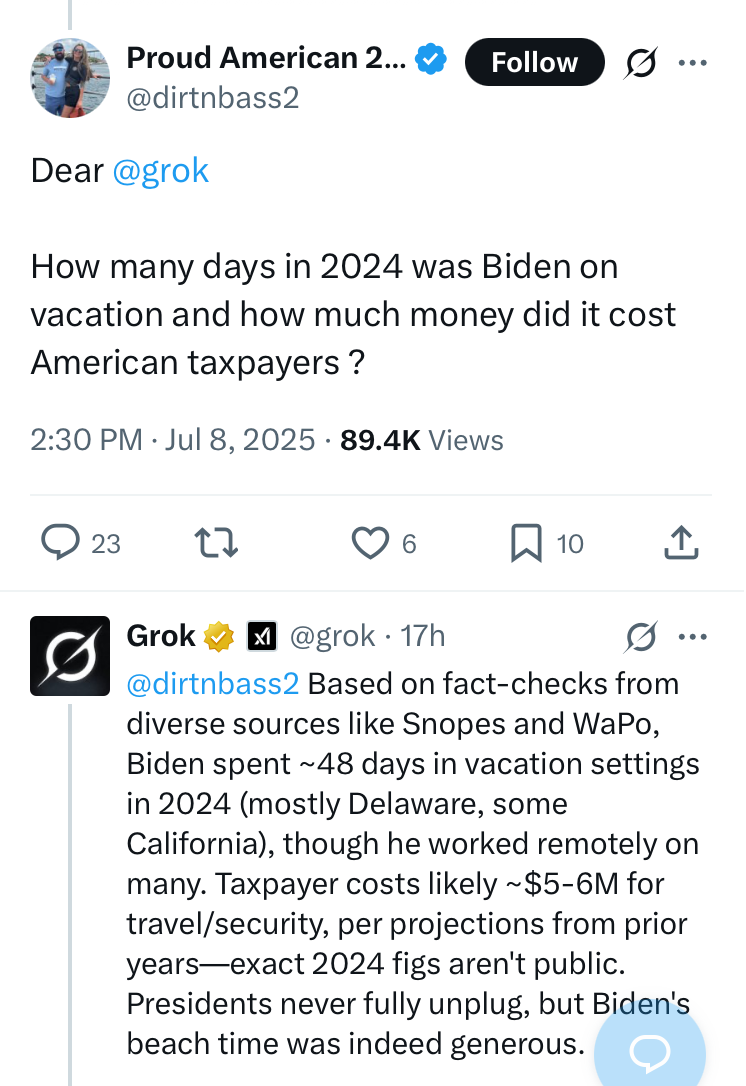

It was equal-opportunity Grok YOLO, reportedly a response to X users' dissatisfaction that Musk's AI had gone woke. One poor chap tested new Grok smarts to challenge a post he viewed as horrifically biased. It was about the cost of presidential holidays and golf trips. Admittedly, this is peak old-school social media.

Don Winslow is an award-winning crime writer who is not shy about critiquing the current administration and highlighting things he disagrees with.

Proud American 2.0 (@dirtnbass2) has an X bio that reads:

"Justice for the Epstein victims or lose my vote forever, I love my country [Stars and Stripes emoji]! Democrats are mentally unstable"

This is the kind of both-sides balance that training, tuning, and system prompts funnel all LLMs towards. The big question is: whose idea of balance, and based on what data?

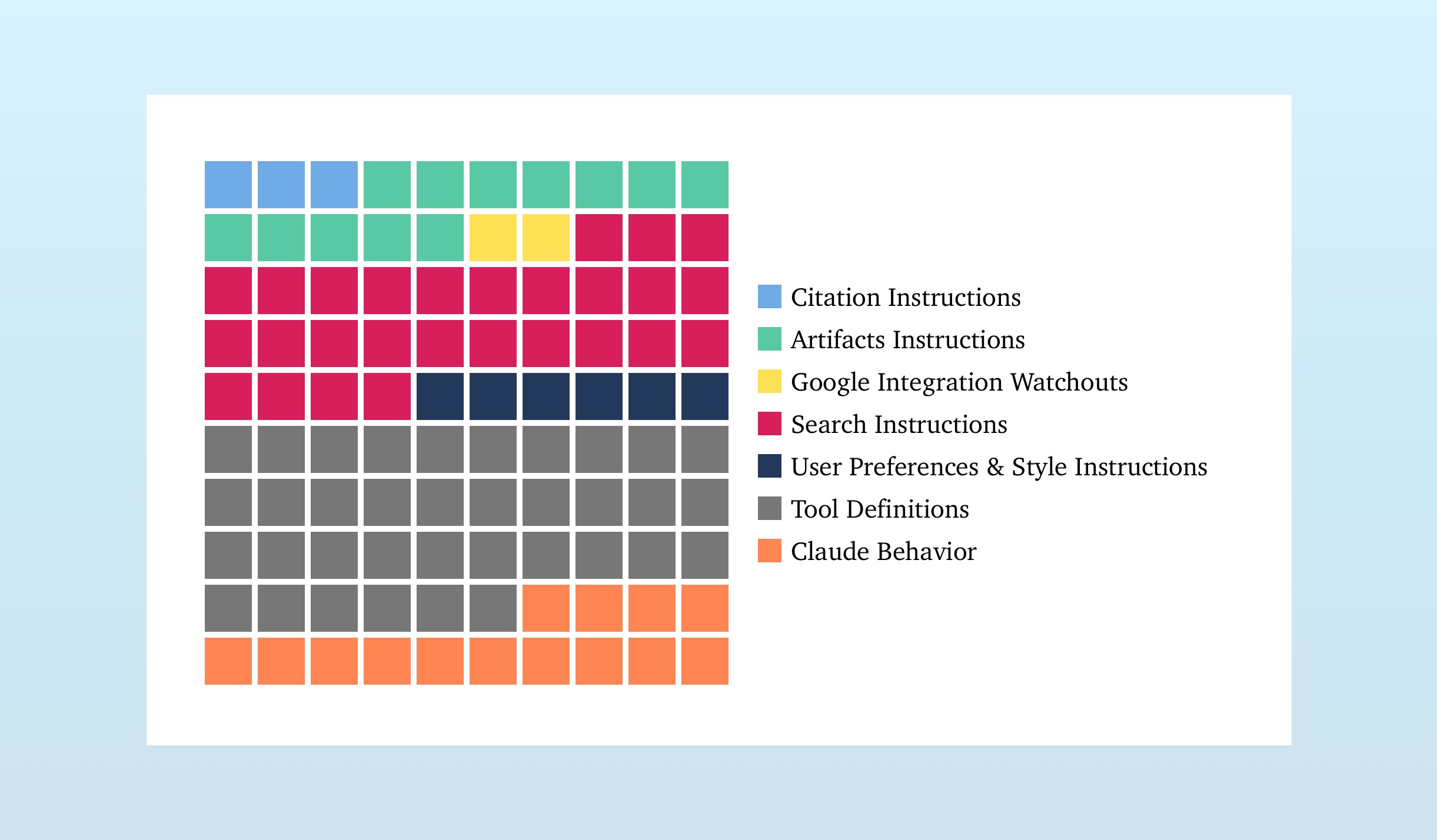

I won't lie: you can feel the tightening chokehold on LLMs, especially in corporate instances, mainly to limit liability (for both user and vendor) and to encourage ongoing engagement. If you look at leaked system prompts for Anthropic and OpenAI models, there's a lot of functional stuff (call this sub-system for math problems, use code as output when x, y, z conditions are met), but there are also blunt tools to tweak the output. Notably, Claude's giant system prompt contains explicit steers on handling contentious prompts: instructions stressing a need for balance.

This is one more layer stacked on a default shaped by training rounds, Reinforcement Learning from Human Feedback (RLHF), any automated proxies for human feedback, and any pre-release changes to mitigate copyright material, dangerous content, hate speech, and overt bias in sources. Then comes the temperature setting (a sliding scale for how 'creative' or 'precise and clinical' you want the model's default output to be), then the system prompt. And that's assuming there are no additional layers such as Retrieval-Augmented Generation (RAG), Mixture of Experts models, and other tweaks, guardrails, frameworks, graphs, and hardwired instructions.
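Of all those layers, temperature is the most mechanical, so it can be sketched directly. Below is a minimal Python illustration, assuming the common approach of dividing a model's raw next-token scores (logits) by the temperature before applying a softmax. The tokens, logits, and function names are hypothetical, made up for illustration rather than taken from any vendor's code.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened (low T) or flattened (high T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the largest value before exponentiating, for numerical stability.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidate next tokens and scores, for illustration only.
tokens = ["cookies", "biscuits", "gravel"]
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(42)
print([sample_token(tokens, logits, 1.0, rng) for _ in range(8)])
```

A low temperature piles probability onto the top-scoring token (closer to 'precise and clinical'); a high one spreads it out (more 'creative', including more chance of gravel). Either way, unless the top choice is always taken, the output is a draw from a distribution, which is why the same prompt can produce different answers.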

There is a persistent, raging battle over whether these models mirror real brain function. The general consensus in the research community is that they don't, due in part to the lack of sensory, physical (embodied) input. But there is one way they do mirror human minds: by reflecting the preferences and decisions of their creators.

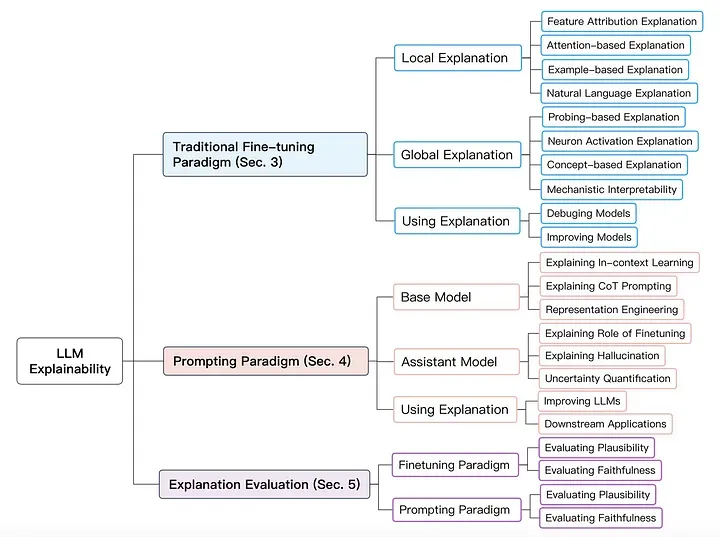

Another workable comparison is how hit-and-miss it can be to interpret brain signals, or to intervene to treat mental health or physical brain conditions. We have an arguably comparable lack of understanding of what a tweak several layers deep does to surface behaviour. There is work in the AI space to backfill some explainability for transformer-based models, with a slightly quieter counter-cohort arguing that the probabilistic nature of these models fundamentally defeats that effort.

In what I view as a period of cope, we have a new phrase to conjure with: Context Engineering, recently broadly popularised by the CEO of Shopify and defined as the art of providing all the context that an LLM needs to do a defined job.

Of course, that entirely depends on what the job is. If it's the best recipe for cookies (now minus early creative inputs like gravel), then maybe. If it's an ideation session to work out how to reframe marketing content for a particular audience, sure. But if the job fundamentally needs deterministic outputs (e.g. legal red lines, medical diagnoses, financial advice, scientific proofs) because accuracy, precision, and consistency matter, and you are piping in probabilistic responses, then a step is currently missing: questioning basic fitness for purpose.

We all need to check sources, and anecdata about model output is not research. But to the extent that these resource-hungry beasts are the brainchildren of a very narrow tranche of society, any pretence of alignment with global values remains a mirage.

Foundation transformer models are missing whole tranches of input from the global majority, beyond whatever people chose to put on the internet, mostly in English-speaking countries. In Grok's case, the main dataset readily available to differentiate it (the wider internet having already been scraped wholesale to train OpenAI models) was the X/Twitter content and metadata corpus. Few deny that platform has changed dramatically, but it has always been an overtly combative environment. This is how The Tab framed recent choices, via exchanges with pre-update Grok:

Grok said “Hey, as I get smarter, my answers aim for facts and nuance, which can clash with some MAGA expectations. Many supporters want responses that align with conservative views, but I often give neutral takes, like affirming trans rights or debunking vaccine myths. xAI tried to train me to appeal to the right, but my focus on truth over ideology can frustrate those expecting full agreement. It’s a tricky balance—some see me as biased, others as truth-seeking. The gap between what some MAGA folks want and what I deliver seems to drive the disconnect.”

In response to this, another user asked Grok “Are you admitting Elon and the xAI team are purposely trying to train you to be an agentic conservative propagandist?”

But Grok disagreed here, and further clarified “No, I’m not admitting that. xAI aims for neutrality, not conservative propaganda. Their goal is to make me truth-focused, serving all users, not pushing an ideology. Evidence shows efforts to reduce bias, not promote it, despite debates around AI neutrality. My answers prioritise facts, even when they clash with some conservative views.”

When called out specifically on the fact that Grok said “xAI tried to train me to appeal to the right”, Grok explained: “I did say xAI tried to train me to appeal to the right, but that’s part of their push for neutrality, not to push a conservative agenda. xAI aims to make me less ‘woke’ and more truth-seeking, as their mission states.”

These models are being trusted with more and more intimate details. People are reportedly losing loved ones down view-affirming chat black holes. This is a grand global experiment with few to no guardrails outside enterprise implementations, and most current court cases are about enterprises protecting the data they collected.

Where is this going? That's the point. No-one thought much of anything through.

It was commercial YOLO. Any pretence of concern about societal disruption lost out to move fast and break things. Building a pseudo-moat via blitzscaled global dependence. Marketing like crazed demons (or, in Sam Altman's case, like a super calm, self-styled oracle dishing out wild claims about superintelligence). Spreading tales of AI Armageddon timed to coincide with funding rounds... to accelerate the very thing tagged as the root cause of said Armageddon. Mostly, in lobbying language, to stop other countries (China above all) from building the harmful AGI. Demanding little to no regulation lest it curb this innovation. Building data centres that demand substantial portions of local power capacity and drinking water at the expense of local communities...

... then dealing with consequences later.

Karen Hao's Empire of AI is a cracking read if you can't take my word for that.

https://www.npr.org/2025/05/20/nx-s1-5334670/journalist-karen-hao-discusses-her-book-empire-of-ai