That is the state some politicised content has been trying to provoke via flooding the zone and other such shenanigans, but this isn't just about overwhelming noise from the US and new allies. This is about a dawning realisation that GenAI has a huge output validation challenge. Or, put another way, a Validation Paradox.

Back to the Tech Disruption Future

Before the big public release of ChatGPT, I started dedicating lots of time to getting my head around what was coming and what was already there in AI / ML spaces. Then OpenAI did what they did. From day one I worried at the question of output verification. How did we plan to service that need at a whole new scale? How would we confirm content, decisions, or actions were accurate, real, or otherwise fit for purpose? I'm late to this game by some yardsticks, but a long-time player by many others.

I've been eye-rolling through round after round of 'We can totally replace X, Y, Z subset of jobs with GenAI / RAG-supplemented GenAI / reasoning models / agentic models'. But who will create, clean, and structure the data? Who will check whether buckets of wildly variable code, data, documents, and instructions are good enough?

I've talked about the overenthusiastic off-boarding or TUPE transfer of specialists during various IT outsourcing and cloud migration waves, and the hasty rehiring of folk who understood the local environment and integration challenges. Things that didn't show up in the ROI decks from most of the consultancies and vendors.

That is the 'damn' part of the damn weary. Here we go again. The solution will likely be cast as a management science epiphany: 'Humanising AI Agents for Optimum Results' via HBR, or some such bollocks. All while ignoring the opportunity costs of other 'AI' paths less travelled in the financial feeding frenzy.

A quick pause to apologise for the language and tone of the blog lately. I did explain that this is writing to think. Thinking right now needs lots of noise cancellation, so the signal-to-noise ratio can be a little unhelpful.

The Klarna Saga, DOGE, and Lack of Validation

Taken at face value, Klarna's AI chatbot deployment partly illustrates what I've termed the Validation Paradox: post-implementation validation and quality control costs can significantly erode advertised generative AI savings.

The Headline vs Reality Gap

The initial headlines were striking. Klarna announced its OpenAI-powered chatbot could handle the workload of 700 full-time customer service agents, projecting a £31 million profit improvement in 2024. CEO Sebastian Siemiatkowski framed this as inevitable disruption:

We are adapting the Klarna organisation... Unfortunately, this means we need to make around 700 roles redundant amongst our employees. Our assessment is that this will affect all parts of the organisation.

However, when The Pragmatic Engineer examined the chatbot's capabilities, it found the bot primarily handled basic level 1 support, relying on documentation retrieval and injected order context. Anything complex reportedly triggered an immediate handoff to a human.

AI-Washing Budget Reductions

Klarna likely wasn't ignorant of current GenAI validation challenges, but probably 'La La La'd past quantifying the scale of that burden. There also appears to be an element of 'AI-washing' - using AI as cover for strategically convenient headcount and budget reductions that were likely already planned.

This pattern mirrors what we're seeing with federal staff reductions under Musk's Department of Government Efficiency (DOGE), where AI is being positioned as a decision-making tool to determine which jobs are mission-critical. In both cases, AI serves as justification for cuts that might otherwise face more resistance.

The Gentleman's Layoffs

Both Klarna and Musk operate with clear monetary targets: Klarna is aiming to prettify its bottom line ahead of an IPO, and Musk is pursuing a variable number of trillions in budget cuts. The key difference is that Siemiatkowski wants Klarna to continue functioning, whereas Musk's activities with USAID, the Consumer Financial Protection Bureau, and other agencies suggest a more destructive intent.

The Validation Reality

After the initial fanfare and layoff announcements, Klarna's position evolved significantly. The company reportedly did not follow through with the promised hiring freeze. Siemiatkowski later pivoted dramatically, posting this on X:

At Klarna, we've come to realize that exceptional customer experience is fundamentally about our people. While our AI assistant handles routine inquiries brilliantly, we're now investing more in our human teams who manage complex cases that require empathy and specialized knowledge.

This represents a stark shift from an absolutist, automation-centric narrative to an acknowledgement that the human elements of service are essential.

The Pattern of Rehiring

As with many cost-cutting initiatives, Klarna's has followed a predictable pattern of selective rehiring in critical areas. This again mirrors DOGE's approach, where key personnel were quietly brought back, as seen with the FDA's medical devices division and nuclear safety personnel (among many others who were reinstated).

The Less Acknowledged Costs

The Validation Paradox mainly manifests through necessary post-production activities:

- Integration and orchestration: Post-implementation challenges and changes to ensure outputs and actions remain fit for purpose in complex networks.

- Technical validation: Monitoring for prompt injections, hallucinations, errors propagating through agentic workflows, and other functional/non-functional issues.

- Quality assurance: Ensuring outputs maintain accuracy, consistency, safety, customer service standards, and other quality-related service levels.

- Regulatory compliance: Critical for a fintech handling sensitive data.

- Content tuning: Refining responses based on customer feedback.

- Human escalation teams: Required for monitoring and complex queries.

These costs are rarely calculated at the early stages of change, development, or procurement because they depend fundamentally on operational context. Much of this work will already be happening without AI specifics, but the delta for the new overhead (with more black-box elements and skill shortages) is being underestimated. The challenge isn't whether GenAI can handle simple tasks; it's whether savings from attempted automation outweigh the ongoing costs of checking AI outputs, handling technical monitoring, managing exceptions, collecting and processing feedback, investigating issues, and integrating lessons learned.
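At heart, that trade-off is simple arithmetic: the saving that survives equals the advertised saving minus the ongoing overheads listed above. Below is a minimal Python sketch of that framing. It assumes a single annual figure per cost category. The class and method names (`AutomationCase`, `net_savings`, `erosion`) are hypothetical, and every number is illustrative rather than taken from Klarna or any real deployment.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AutomationCase:
    """Hypothetical model of one automation business case.

    All figures are illustrative annual amounts in arbitrary currency units.
    """

    # Advertised saving, e.g. from reduced headcount (hypothetical).
    gross_savings: float
    # Ongoing post-production overheads, keyed by cost category.
    overheads: dict[str, float] = field(default_factory=dict)

    def total_overhead(self) -> float:
        """Sum of all ongoing validation and operational overheads."""
        return sum(self.overheads.values())

    def net_savings(self) -> float:
        """What is left of the advertised saving once overheads are paid."""
        return self.gross_savings - self.total_overhead()

    def erosion(self) -> float:
        """Fraction of the advertised saving consumed by overheads."""
        if self.gross_savings == 0:
            raise ValueError("gross_savings must be non-zero")
        return self.total_overhead() / self.gross_savings


# Purely illustrative numbers, not drawn from Klarna or any real deployment.
case = AutomationCase(
    gross_savings=100.0,
    overheads={
        "integration_and_orchestration": 12.0,
        "technical_validation": 15.0,
        "quality_assurance": 10.0,
        "regulatory_compliance": 8.0,
        "content_tuning": 5.0,
        "human_escalation": 25.0,
    },
)

print(case.net_savings())  # 25.0
print(case.erosion())      # 0.75
```

With these made-up inputs, three quarters of the headline saving goes on keeping the automation fit for purpose. The model itself is trivial. The hard part is that the overhead figures are the ones rarely estimated up front.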

Culling Technology Oversight Functions While Deploying AI

The article below is about the firing of the 18F team from the General Services Administration (GSA). The team helped federal agencies improve technology, digital services, and user experience. It was founded in 2014 as part of a broader effort to modernise government IT and make it more efficient, secure, and user-friendly.

It was geared up to support exactly the kind of novel tech due diligence I called out as vital earlier in this post.

Key Roles of 18F:

- Digital Modernisation: Helped agencies update outdated systems and adopt modern, agile development practices.

- User-Centred Design: Ensured that government digital services were designed around the needs of citizens and stakeholders.

- Agile Software Development: Used iterative, agile methodologies to quickly develop and improve digital products.

- Procurement & Acquisition Support: Assisted agencies in adopting better procurement practices for digital services.

- Open Source Advocacy: Encouraged the use of open-source technology to increase transparency, security, and collaboration.

- Security & Compliance: Helped agencies meet federal security and compliance standards, such as FedRAMP for cloud services.

Mark Cuban's offer to bankroll the creation of a consultancy for fired 18F staff would, ironically, achieve the opposite of DOGE's stated intent. As the article says:

The government using private contractors doesn’t make things cheaper. Consultants make a premium for their services, and when you’ve got a single client like the U.S. government with almost unlimited funds, the tendency is to milk them for all it's worth. Just ask all those companies building jets and nukes for the Pentagon.

Cuban is pitching a future that’s similar to what Musk and Trump want. One where private interests make a ton of money off the U.S. taxpayer and nothing is ever done for the greater good but only for the greater profit. In this situation, at least the good people of 18F could be the ones reaping the rewards.

Bigger Pictures

The Validation Paradox isn't just about technology; it's about the disconnect between headline-grabbing cost-reduction announcements and broader operational realities. Those realities include things that are typically very hard to quantify, like creativity, deep local knowledge, and the means to understand complex systems such as businesses or, at a whole new order of magnitude, the federal government. The pattern is the same: advertise savings, efficiency, and waste elimination to bolster a valuation or personal capital, then worry about messy contextual reality later.

If (as clearly evidenced in many of Musk's actions and comments) debilitating functions is the real goal, the impact has to be painted as somehow desirable. That is increasingly hard with activity inside government agencies, in particular the latest work around Social Security. Laid-off customer service agents may not have a loud collective voice. Not so with government workers and affected Republican constituents who attend town halls, a group that includes a large number of veterans and internationally valued experts.

Rehiring once trust and system operations have been severely disrupted is likely an entirely different equation. There are strong signs that other nation states are also taking advantage of discarded intellectual capital.

We have to ask ourselves about the medium- and longer-term costs against a backdrop of geopolitical and market signals. That is what people without significant wealth are watching, nervously eyeing their bills and pensions.

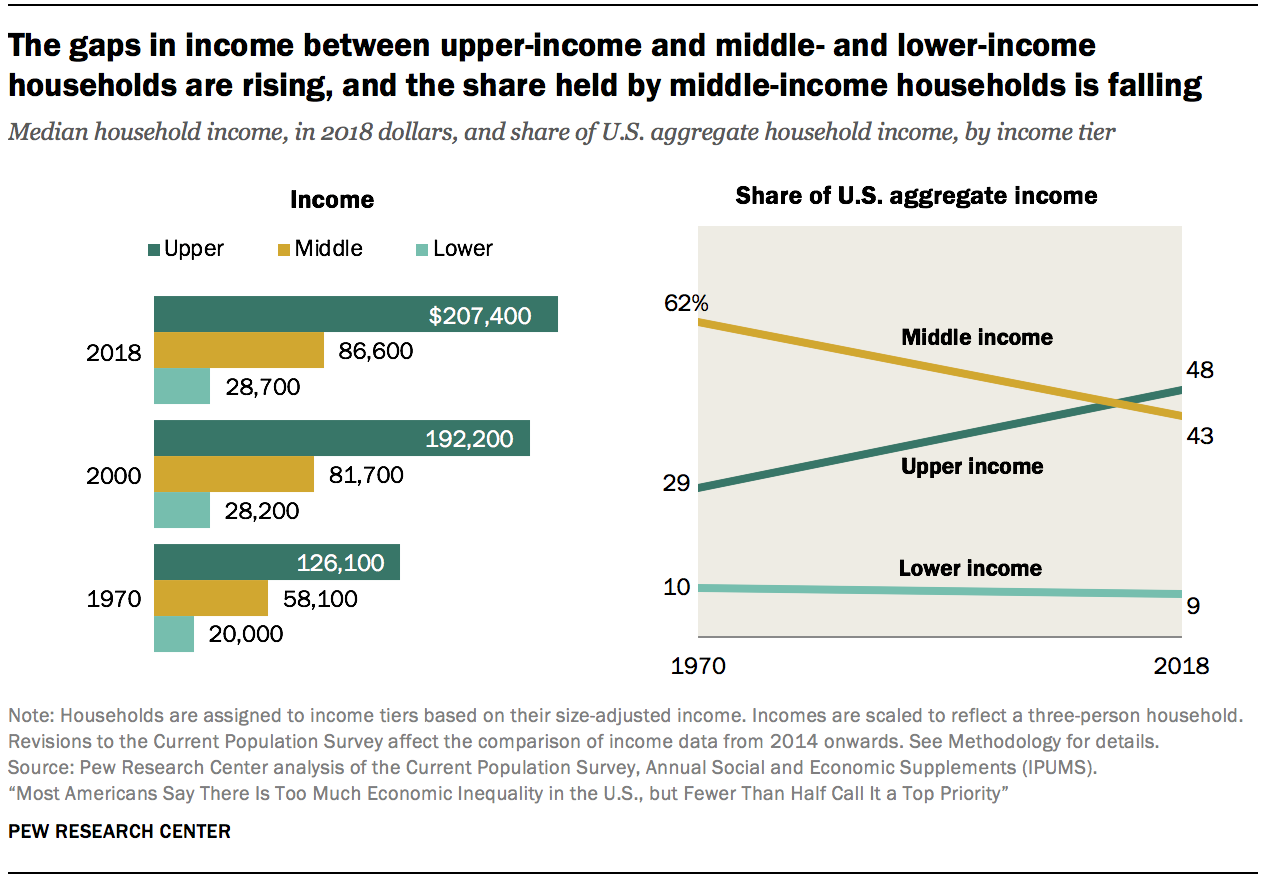

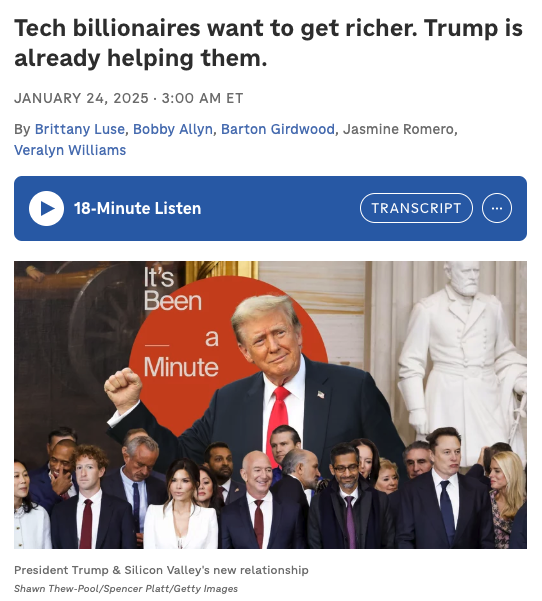

Tech bubbles built on speculative, excessive investment (far beyond realistic short-term returns); devastation of the US's largest employer; trade wars (see the Tax Foundation tracker for Trump's tariffs); asset and service privatisation (such as reported plans for GSA to sell hundreds of government buildings, likely to lease them back); radical deregulation; and the removal of consumer and worker protections. Pew Research Center data shows some related historical effects (1970-2020).

The mid-noughties are calling. They want to fist-bump the guys turbo-charging that playbook, with a whole new kind of pain in prospect. Wealth and property would be transferred away from almost everyone to the wealthiest (very transparently in the Republican budget), providing bolstered liquid capital and fewer checks on buying the dips. Who supports the government in doing that?

Or maybe not. Maybe I'm wrong.

Let's all hope so, because the ripples would be long-lasting and global: a short-term focus on shareholder returns, with progressive removal of limits, protections, and sanctions for exploitative excesses. Things with a predictably cyclical shelf-life.