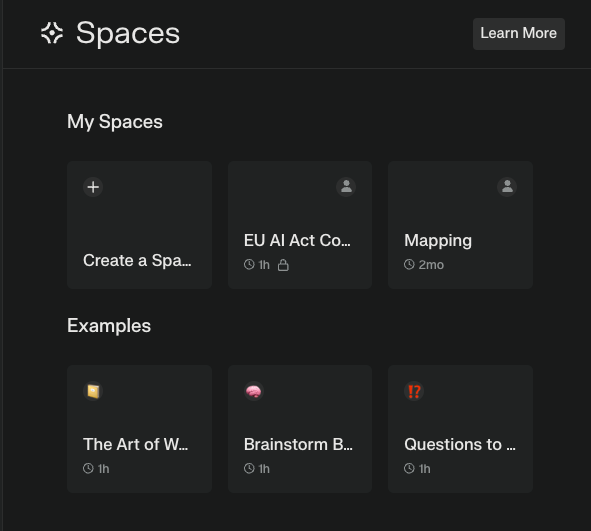

In the last four months we have mostly been working with Claude Artifacts and Claude Projects. We saw the viral spread of the Audio Overview feature in Google NotebookLM, a toolset first introduced in 2023. We noted the introduction of OpenAI Canvas, and today we had a first poke at Perplexity Spaces (it looks like the love child of NotebookLM and Claude Projects, but it is a proprietary Perplexity product). This is a good comparison of Spaces and Artifacts. Are you keeping up?

When Claude Artifacts were quietly released in late July 2024, we found out by accident. A piece of code to analyse survey responses popped up in a separate window, and then we started exploring. It was a big hit with a lot of people.

What do all these things have in common? They allow varying degrees and kinds of collaboration; pre-loading of instructions for content generation; pre-loading of code, documents, and other inputs that should apply across multiple chats or threads; and generally bigger context windows (more tokens, or words). All of this is aimed at getting over the 'cold start' problem. Here's a good extract from a blog post explaining it:

The “cold start” problem in AI interactions refers to the challenge of getting an AI system to provide relevant and accurate responses when it lacks specific context or background information about a user’s particular needs or domain. - Brady Hawkins, Begins With AI Blog, 28th June 2024

Summarising how the same source explores this for Claude Projects:

- Customised Knowledge Base: Claude starts with relevant context from uploaded documents, allowing immediate responses without needing prolonged prompting to set context.

- Interaction History: Threads within a project are saved, enabling Claude to use previous exchanges to inform future interactions, avoiding the need to restart from scratch.

- Customised Instructions: Set project-specific instructions (tone, style, role) to guide behaviour, ensuring aligned responses from the start.

- Artifacts: Persistent project outputs (e.g., code or drafts) are stored in a dedicated panel, allowing you to keep them in a useful structure and context.

- Sharing Capabilities: In team settings, snapshots of Claude interactions can be shared in a project activity feed.

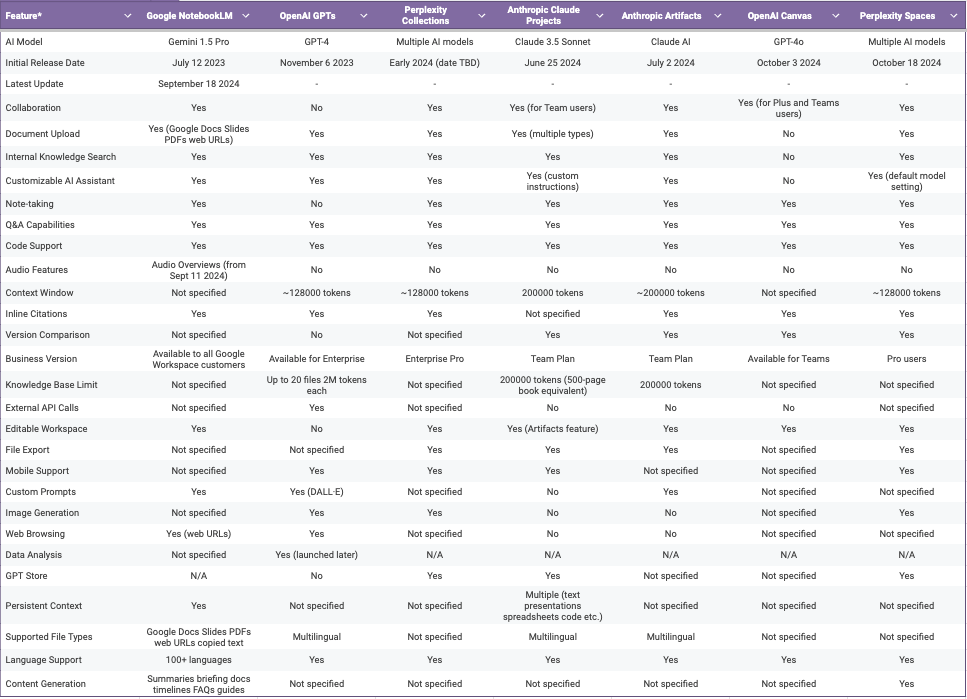

What we probably need is a feature comparison table. So that's what we set out to produce, working across Claude 3.5 Sonnet, Perplexity, and ChatGPT 4o.

Ironically, for all their different features and foibles, these tools still struggle to come up with reliable timelines and feature sets for comparison. Precise details, and keeping them in strict order, are still not generally LLM strong points. We sourced good articles and checked some details, but at a certain point, not being paid to write, we added the references and decided to let you check the rest.

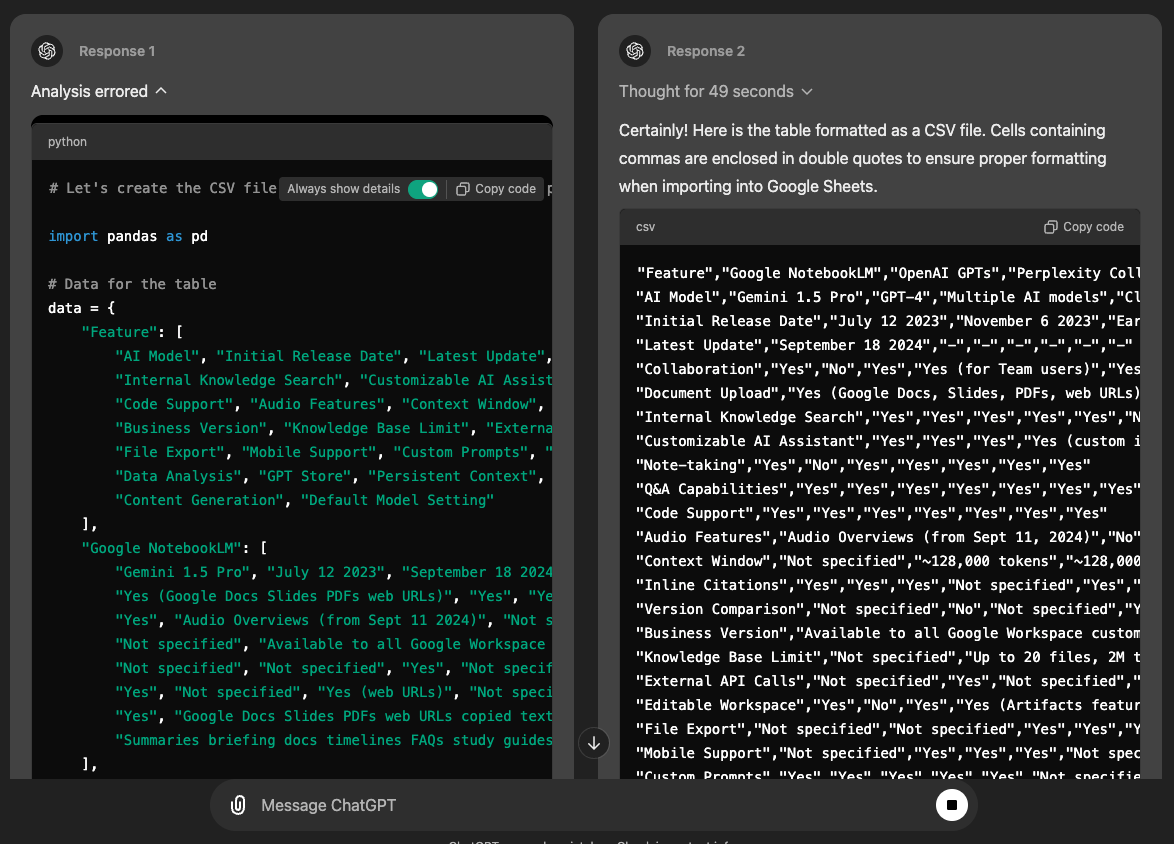

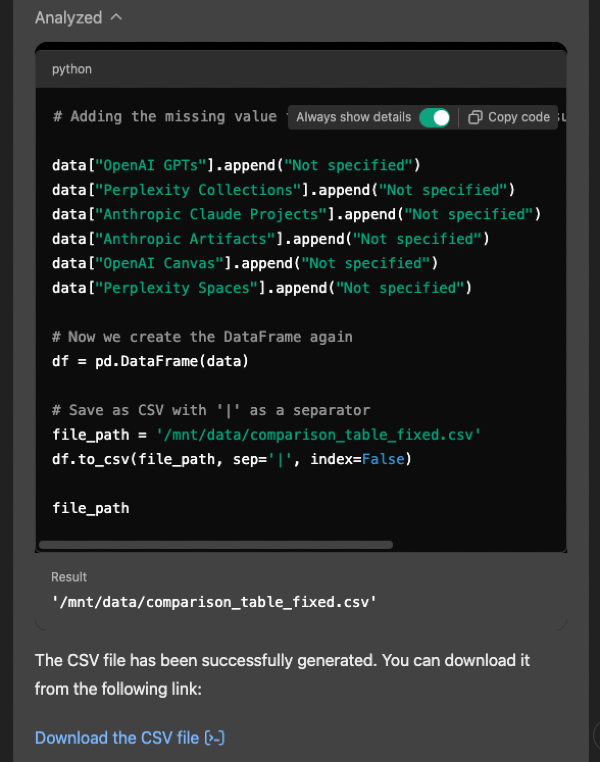

Adjusting the table format and content by prompting was not worth the effort. Copy-pasting into Excel or Google Sheets works differently for different models. The format ChatGPT 4o offered for pasting into Google Sheets defaulted to comma-separated text, which wasn't ideal because some cells contained commas.
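To make the comma problem concrete, here is a minimal Python sketch using only the standard library `csv` module. The sample rows are hypothetical, not our actual table. It contrasts naively joining cells with commas against proper CSV quoting, and also shows tab-separated output, which spreadsheets typically split into cells on paste.

```python
import csv
import io

# Hypothetical sample rows; the second "Feature" cell itself contains commas.
rows = [
    ["Tool", "Feature"],
    ["Claude Projects", "Custom instructions, knowledge base, shared chats"],
]

def naive_join(rows):
    """Join cells with bare commas: breaks when a cell contains a comma."""
    return "\n".join(",".join(row) for row in rows)

def quoted_csv(rows):
    """Standard CSV: cells containing commas are wrapped in double quotes."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(rows)
    return buf.getvalue()

def tsv(rows):
    """Tab-separated text, an alternative that avoids the comma clash."""
    buf = io.StringIO()
    csv.writer(buf, delimiter="\t", lineterminator="\n").writerows(rows)
    return buf.getvalue()

if __name__ == "__main__":
    # Re-reading the naive output splits the feature cell across three columns.
    print(list(csv.reader(io.StringIO(naive_join(rows)))))
    print(quoted_csv(rows))
```

Quoting keeps each cell intact when the text is re-read, while the naive version silently shifts content into extra columns.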

Again with ChatGPT 4o (we had run out of Claude 3.5 Sonnet tokens by this point), you can also produce a .csv file to download. Then you just need to bang it into your preferred spreadsheet and edit. However, with the new chain-of-thought feature it showed all its workings, errored, then tried again, instead of just giving a link to download the file as it used to. Perhaps that saved us a second bite at the .csv-generating cherry; we didn't test that premise.

There were various other hiccups, like needing to convert the pipe-delimited .csv file in Google Sheets, but here you are: an image of the table. This is the link to the Google Sheets source, if you're interested. Feel free to reuse it, but do check the details first.
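If you hit the same pipe-delimited output, one option outside the spreadsheet is to re-encode it as ordinary CSV before importing. This is a minimal Python sketch, assuming the `|` character never appears inside a cell; `pipe_to_csv` is a hypothetical helper name, not part of any tool mentioned above.

```python
import csv
import io

def pipe_to_csv(text: str) -> str:
    """Re-encode pipe-delimited text as comma-separated CSV.

    Assumes '|' is only ever a delimiter, never cell content.
    Cells that contain commas are quoted automatically by csv.writer.
    """
    reader = csv.reader(io.StringIO(text), delimiter="|")
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for row in reader:
        if row:  # skip blank lines, which csv.reader yields as []
            writer.writerow([cell.strip() for cell in row])
    return out.getvalue()

if __name__ == "__main__":
    sample = "Tool|Feature\nClaude Projects|Knowledge base, instructions\n"
    print(pipe_to_csv(sample))
```

The design choice is simply to let the `csv` module handle quoting on both sides rather than doing string replacement, which would mangle any cell that already contains commas.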

References for most of the data (we make no guarantees of accuracy):

- Google (2023) 'Introducing NotebookLM', The Keyword, 12 July. https://blog.google/products/workspace/introducing-notebooklm/
- Google (2024) 'NotebookLM now available as an Additional Service', Google Workspace Updates Blog, 18 September. https://workspaceupdates.googleblog.com/2024/09/notebooklm-now-available-as-additional-service.html
- Google (2024) 'NotebookLM gains podcast-like Audio Overviews', The Keyword, 11 September. https://blog.google/products/workspace/notebooklm-audio-overview/
- OpenAI (2023) 'Introducing GPTs', OpenAI Blog, 6 November. https://openai.com/blog/gpt-4-turbo
- Anthropic (2024) 'Claude Projects', Anthropic Blog, 25 June. https://www.anthropic.com/news/projects
- Anthropic (2024) 'Claude Artifacts', Anthropic Blog, 2 July. https://www.anthropic.com/news/artifacts
- TechCrunch (2024) 'OpenAI launches new "Canvas" ChatGPT interface tailored to writing and coding projects', TechCrunch, 3 October. https://techcrunch.com/2024/10/03/openai-launches-canvas-interface/
- Shabanov, A. (2024) 'Perplexity redefines collections with Spaces, allowing default model settings', TestingCatalog, 8 October. https://testingcatalog.com/perplexity-spaces-update/

What, overall, is this trying to say?

We're mainly asking how most of us are supposed to choose between tools and features. How do we keep up? Do we default to whatever we happened to get most familiar with? Do the biggest players win because they have operating system or device dominance, and most of our lives are far too short to dig into the details?

Are we reverting to (or did we ever move on from) calling on our intermediary tech specialists to piece this together? Matching tools with requirements and bits of code. Baking up a hotchpotch of best bits with roughly comparable utility, most of them differing by the equivalent of an iPhone upgrade. Is that investment of their time and ours even worth it, given this timeline of new releases? Just asking.

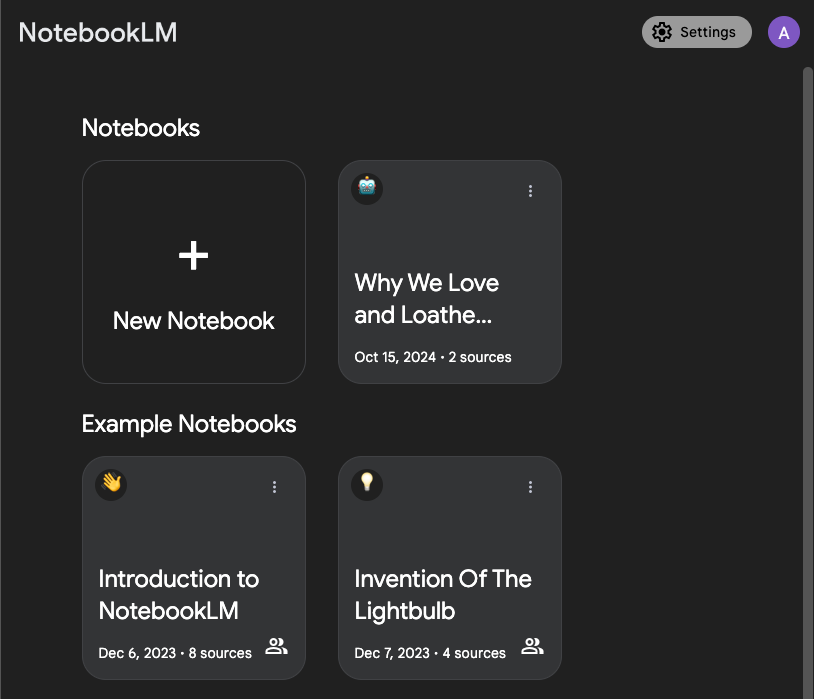

1. Google NotebookLM

- July 12, 2023 (Initial release)

Google introduced NotebookLM, an AI-powered tool designed to help users generate summaries and insights from their documents, making note-taking more efficient by pulling relevant information from personal files.

- September 11, 2024 (Audio Overview introduced)

NotebookLM was enhanced with an Audio Overview feature, allowing users to listen to AI-generated summaries of their documents. Two AI hosts discuss the material, highlighting key points in a conversational format, which suits auditory learners.

- September 18, 2024 (Official release as an Additional Service)

Google made NotebookLM available as an official Additional Service within Google Workspace, providing businesses with enhanced note-taking and summarization capabilities for professional and academic use.

2. OpenAI GPTs

- November 6, 2023

OpenAI launched GPTs, customizable AI assistants powered by GPT-4, which allow users to tailor their experience for different tasks such as writing, coding, and general productivity.

3. Perplexity Collections

- Early 2024 (exact date TBD)

Perplexity AI introduced Collections, a tool that helps users organize search results, AI conversations, and documents into categorized collections for easy reference and collaboration.

4. Anthropic Claude Projects

- June 25, 2024

Claude Projects launched as part of Claude 3.5, providing team collaboration tools where users can organize chats, documents, and knowledge into project-based environments, improving teamwork and productivity.

5. Anthropic Artifacts

- July 2, 2024

Artifacts, a feature within Claude Projects, allows users to create, edit, and share content such as code snippets or documents alongside their AI-driven conversations.

6. OpenAI Canvas

- October 3, 2024

OpenAI released Canvas, a collaborative tool for project-based writing and coding. It allows teams to work together in real time, integrating GPT-based models into shared workspaces for streamlined workflows.

7. Perplexity Spaces

- October 18, 2024 (Released to Pro users)

Perplexity Spaces redefined the previous Collections feature, adding advanced internal knowledge search capabilities and default model settings, improving content organization and team collaboration.

8. Perplexity Internal Knowledge Search

- October 18, 2024 (Released to Pro users)

Alongside Spaces, Perplexity launched Internal Knowledge Search, enabling users to search across internal documents and stored knowledge bases, enhancing team productivity through faster access to information.

Can we shake off the macro picture of ongoing market instability and consolidation, as well as concerns about AI power and cooling requirements? Is it ethical to La La La about the conditions many of the people still training and tuning models are working under, and about the misuse of AI for criminal and coercive purposes in so many quarters?

Do we have the headspace to muse on that and get on with our day jobs? This site has additional time right now to try these things out and write about them. You likely don't. It's a lot, but it's also still a stunning productivity aid when we zero in on best-fit features and don't get distracted. But AGI? Not so much.

Natural language intermediary layers for computer interactions are wonderful, or will be once folk can be honest about what they are and are not, and once we get very transparent about the time and effort folk persistently put in to understand them and integrate them into pre-existing, pressurised workflows.

There is a limited length of experimenting string before the time taken to play, the cost of licensing, and the accumulated trade-offs trump the uplift. Are we there yet?