This is a post from a place of familiarity with 'Digital' projects and other more general technology integration. Most people who have not been inside those environments have little to no idea of the complexity.

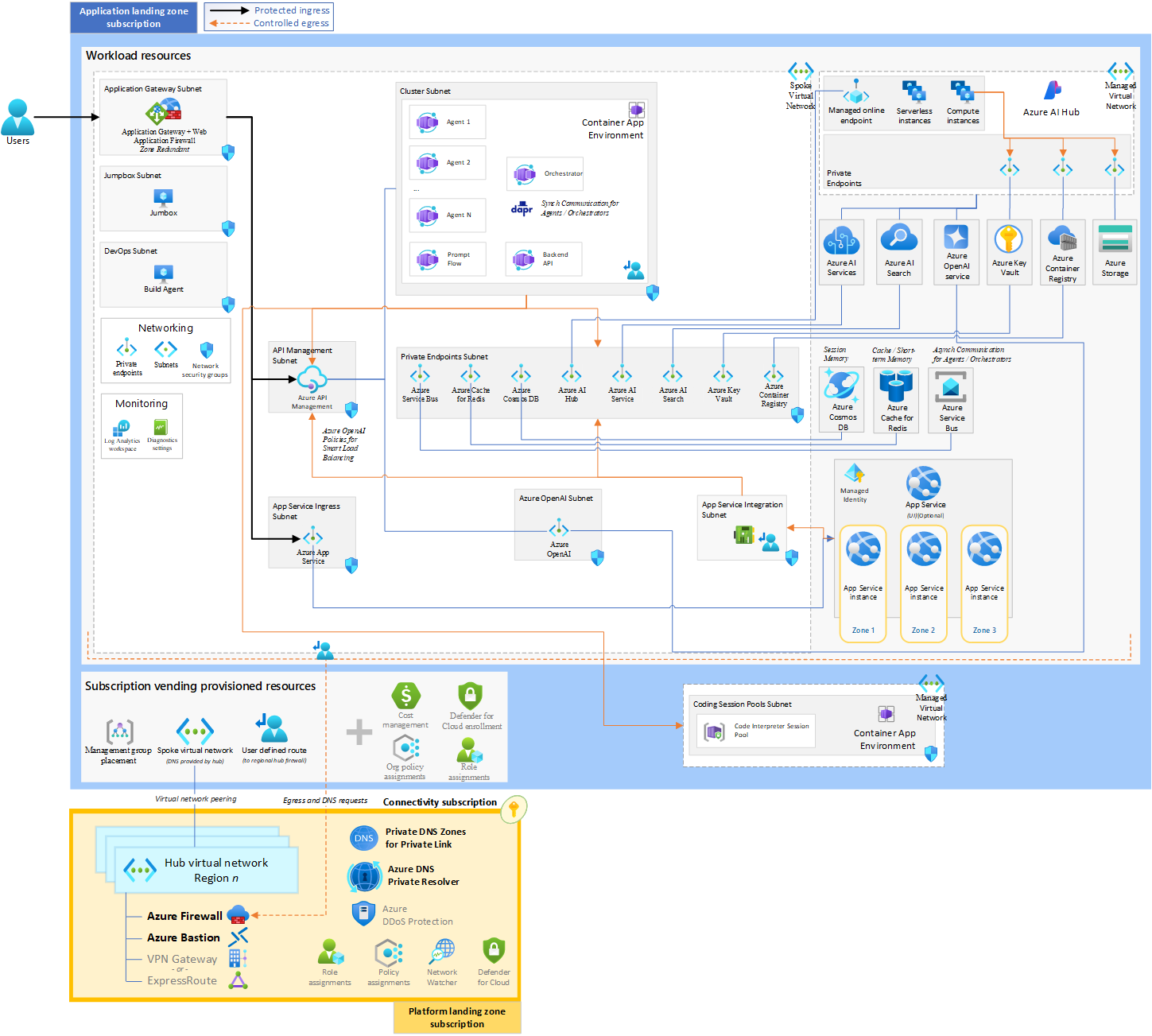

This image is from a Microsoft blog post about their baseline agentic AI architecture.

Shelfware is a term for things that seemed like a great idea, but for a variety of reasons they end up gathering dust vs generating value.

Perhaps due to lack of thought given to integration with existing systems (with or without lots of outsourced elements), perhaps due to rapid scaling without adequate consideration of downstream impacts.

One security-centric historical example is early Intrusion Detection Systems - tools that sit in the network, watching the traffic, seeking anomalies or signatures of known attacks. Traffic that suggests someone is exporting all of your CRM data, known indicators of compromise (IoCs), and other traffic that looks suspicious. A large number of implementations spat out gigabytes of logs and thousands of alerts, often with fairly rudimentary analysis, and little was done with any of it.

Or perhaps, as a more intuitive predecessor, think about the journey to value with many 'big data analytics' projects. The huge promise to monetise company data, everything thrown into big Hadoop instances, sometimes with elusive ROI.

For both examples there were dependencies on staff and a matrix of up and downstream requirements for inputs and outputs. Not least finding, categorising, cleaning, and labelling data.

Tuning for Local Reality

Back to IDS: A lot of local knowledge was needed to identify false positives (flags for issues that were not issues), false negatives (missing flags for real issues), and more general patterns to baseline a 'normal' state to improve analysis and alerting. That needed thresholds for alerts so staff didn't burn out forensically analysing every system burp.
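To make the baselining and threshold idea concrete, here is a minimal sketch in Python. It assumes a toy setup that is not from any real IDS product: traffic is reduced to a single count per interval, "normal" is the mean of a known-quiet period, and an alert fires when a value exceeds the mean plus `k` standard deviations. The function names and the sample numbers are hypothetical, chosen only for illustration.

```python
from statistics import mean, stdev


def build_threshold(baseline, k=3.0):
    """Alert threshold: baseline mean plus k sample standard deviations."""
    return mean(baseline) + k * stdev(baseline)


def classify(observations, threshold):
    """Flag every observation that exceeds the threshold."""
    return [value > threshold for value in observations]


def confusion_counts(flags, truth):
    """Count false positives and false negatives against known outcomes."""
    false_positives = sum(1 for f, t in zip(flags, truth) if f and not t)
    false_negatives = sum(1 for f, t in zip(flags, truth) if not f and t)
    return {"false_positives": false_positives, "false_negatives": false_negatives}


# Hypothetical connection counts per minute during a known-quiet period.
baseline = [100, 110, 90, 105, 95]
threshold = build_threshold(baseline, k=3.0)

# New observations, with analyst-confirmed labels (True = real issue).
observations = [102, 130, 120, 400]
truth = [False, False, True, True]

flags = classify(observations, threshold)
print(confusion_counts(flags, truth))
```

In this toy data the value 130 is flagged although it was benign (a false positive), and 120 slips under the threshold although it was a real issue (a false negative). Lowering `k` catches more real issues at the cost of more noise for staff, which is the local tuning trade-off described above.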

In a more mature IDS world it is a practical input into Security Information and Event Management (SIEM) systems and processes.

Data science was always in that mix, from blunt rules-based tools, via more sophisticated data analytics, to next generation (always next generation) machine learning. Now we have a new generation of AI to pick through data.

This is SecureWorks with a deeper historical dive.

IDS plus Latest AI Tools

There is enormous potential here. Not (in the main) from Generative AI, except as a language interface. It is potentially helpful for initial output characterisation or categorisation and supporting report production, among a few other discrete things. All of that requires careful validation.

Probabilistic assessment and generalisation have their place in interpreting this kind of data, but when it comes to isolating compromised devices, impacted data, attack vectors, ongoing issues, specific fixes, and associated local network and system implications, it needs more deterministic analysis (analysis with clear traceability from inputs to outputs of the kind we largely can't do with GenAI) and deep local knowledge.

When the pockets of reliable network traffic analysis are bedded in, tuned for local traffic patterns, trained on realistic thresholds for alerting, and embedded in other related systems and processes, it is time to shuffle that off into Business As Usual (BAU) operations.

The path from needing deep expertise when assessing value and utility to turn-the-handle operations is not a short one.

Knowing the likely config, maintenance, and downstream effort burden, having clear eyes about the time to mature and the scale of value, and understanding trade-offs (quality, security, privacy, safety, ethics etc) are all vital. The period when you are working that out (or finding someone specialist and trustworthy to do it) is not generally when you see productivity uplift.

The Promise to PITA Journey

That is a path almost all new processes and tools take from generally marketed utility, via a pitch for customers (usually fairly light on detail), to local requirements definition, then potentially a proof of concept (formally, or informally).

Every such journey has 4 distinct alter-egos:

The Promise - PR, public demos, and high-level documentation

The Pitch - Technical pre-sales

The Pilot - That formal or informal suck it and see step

The Product - The first fully featured production rollout

With a fifth alter-ego if the first four force a bad date with complex reality:

The Pivot - The shift when things didn't do quite what you hoped

And the alternative if the union is initially blessed with pieces fitting:

The PITA - The care and feeding for the rest of the infrastructure, software, system, data, process, and vendor relationship lifetime

Yes, that stands for Pain In The Ass.

Within the restriction of words or acronyms beginning with 'P', that is not a bad approximation of the general micro, mid-range, and macro trajectory. Almost all of that, except the Promise and the user-centric PITA, is invisible to a general consumer population and (too often) the board.

The GenAI Journey From Promise to Production

For a GenAI-specific implementation this is where the true journey generally begins. The reality of that 'simple implementation' with pre-trained models:

Initial requirements:

Business processes need to be mapped while considering touch points and dependencies. Data doesn't just need to be found - it needs proper classification, ideally with security and data protection by design. Integration points often aren't simple connections. They can be complex intersections demanding novel connections, especially for legacy systems.

Performance expectations need to be set with brutal honesty, and success criteria must be defined before the rose-tinted glasses come off.

Testing

Testing can become a labyrinth of interconnected challenges. Prompt engineering morphs from simple input-output to a complex dance of context and nuance. Quality assessment never ends, as edge cases multiply. Performance benchmarking often delivers unwelcome surprises, while security testing uncovers integration issues that marketing never mentioned. User acceptance testing frequently sends teams back to drawing boards.

Tuning

A necessity that can transform into an endless cycle of adjustments. Context windows need constant tweaking, token usage demands careful balancing against mounting costs, and response consistency requires attention. Hallucinations - those confidently creative, but unpredictably incorrect outputs - demand sophisticated mitigation approaches. Integrated performance may not match initial expectations, leading to optimisation cycles.
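The balancing of token usage against cost can be sketched with some simple arithmetic. This is a rough illustration only: the per-1,000-token prices, request volumes, and token counts below are placeholder assumptions, not any vendor's real pricing, and `monthly_token_cost` is a hypothetical helper.

```python
def monthly_token_cost(
    requests_per_day,
    input_tokens_per_request,
    output_tokens_per_request,
    price_in_per_1k,
    price_out_per_1k,
    days=30,
):
    """Estimate monthly spend from average token counts and per-1k prices."""
    cost_per_request = (
        input_tokens_per_request / 1000 * price_in_per_1k
        + output_tokens_per_request / 1000 * price_out_per_1k
    )
    return cost_per_request * requests_per_day * days


# Placeholder figures: 1,000 requests a day, 2,000 tokens of prompt plus
# retrieved context, 500 tokens of response, illustrative prices per 1k tokens.
lean = monthly_token_cost(1000, 2000, 500, price_in_per_1k=0.01, price_out_per_1k=0.03)

# Same workload after "tuning" stuffs four times as much context into each prompt.
padded = monthly_token_cost(1000, 8000, 500, price_in_per_1k=0.01, price_out_per_1k=0.03)

print(f"lean prompt:   {lean:,.2f} per month")
print(f"padded prompt: {padded:,.2f} per month")
```

Under these assumed numbers, quadrupling the context more than doubles the monthly bill even though the responses are no longer. Each tuning tweak that adds context is therefore also a budget decision.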

Governance

Doncha love to hate it, but if you can't keep receipts and interpret them in aggregate it can emerge as the hidden iceberg. Data and model governance frameworks need transformation as traditional approaches often falter against AI's probabilistic nature. Usage monitoring, audit trails, and access controls grow increasingly complex, while risk assessments need to evolve.

Assessments struggle to tackle a still poorly understood threat and vulnerability landscape. Performance monitoring generates its own data challenges. Cost tracking can reveal uncomfortable truths as the GenAI market evolves (a new $200 per month OpenAI subscription tier for their newest model, for example), while output quality monitoring points to new areas needing attention. Old style auditing and risk reviews are increasingly not fit for this novel purpose.

Feedback loops

This fundamental requirement is too often just paid lip service when it comes to involving downstream users. User feedback needs systematic collection and analysis. Performance metrics need to be created to fill gaps in standardised benchmarking for models. Cost-benefit assessments are begging for inputs we often don't have yet (happy second birthday to the initial release of ChatGPT).

Aggregate and nett improvements often don't match initial expectations, leading to unplanned requirement refinements and optimisations.

Changes to Initial GenAI Releases

What started as an implementation of a Large Language Model via an API has evolved significantly over the past two years, with each evolution adding new considerations:

Multi-Modal Expansion:

- Text-to-image generation

- Image-to-text analysis

- Audio processing

- Video understanding

- Multi-modal integrations

RAG Developments:

- Vector database integration

- Knowledge base management

- Real-time data integration

- Context window optimisation

- Source verification mechanisms

Small Model Evolution:

- Edge-optimised models

- Domain-specific models

- Precision vs speed trade offs

- Quantization (engineering for that and other trade offs)

- Latency optimisation

Edge Computing Requirements:

- Device compatibility

- Offline capabilities

- Model compression

- Performance optimisation

- Security and privacy considerations

Agentic AI Complications:

- Action planning capabilities

- API integration requirements

- Safe connected systems integrations

- Monitoring all interactions

- Updated control frameworks

- Feedback mechanisms

The 2024 Pitch and Reality Check

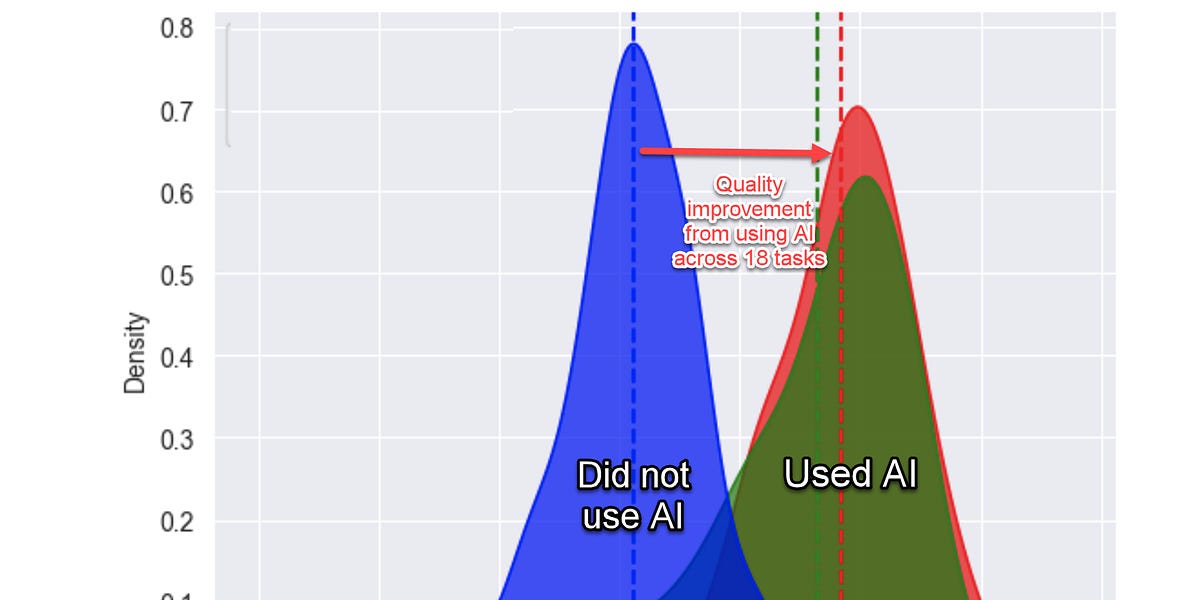

Consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without. Those are some very big impacts.

Ethan Mollick from the below post.

Mollick headlined with that 40% quality uplift for management consultants during a big ChatGPT trial at Boston Consulting Group.

That was a selective interpretation of the full BCG paper published in April 2024. Lots has happened in the generative AI market, but not enough to wipe out implementation, monitoring, and maintenance challenges. Challenges not dissimilar to IDS and Big Data projects.

Adopting generative AI is a massive change management effort. The job of the leader is to help people use the new technology in the right way, for the right tasks and to continually adjust and adapt in the face of GenAI’s ever-expanding frontier.

From the referenced BCG paper

Even allowing for firms that just deploy LLMs or pre-packaged systems like MS Office with Copilot, the initial claims of productivity uplift are far more nuanced, as both Mr Mollick and the BCG study go on to acknowledge.

By some estimates, more than 80 percent of AI projects fail — twice the rate of failure for information technology projects that do not involve AI. Thus, understanding how to translate AI's enormous potential into concrete results remains an urgent challenge.

RAND Org, August 2024

Key Findings

Five leading root causes of the failure of AI projects were suggested:

First, industry stakeholders often misunderstand — or miscommunicate — what problem needs to be solved using AI.

Second, many AI projects fail because the organization lacks the necessary data to adequately train an effective AI model.

Third, in some cases, AI projects fail because the organization focuses more on using the latest and greatest technology than on solving real problems for their intended users.

Fourth, organizations might not have adequate infrastructure to manage their data and deploy completed AI models, which increases the likelihood of project failure.

Finally, in some cases, AI projects fail because the technology is applied to problems that are too difficult for AI to solve.

RAND's summary highlights very familiar implementation complexities, with organisations discovering that the journey from Promise to PITA (and beyond to stabilised value) involves significantly more steps than initially marketed.

What started as "just API calls to pre-trained models" has evolved into a complex ecosystem requiring:

- Sophisticated integration architectures

- Robust security frameworks

- Comprehensive governance systems

- Detailed monitoring capabilities

- Complex feedback mechanisms

Vendors of the solutions for some of these integration, assurance, and monitoring challenges are often the same companies offering the AI tools. Amazon, Microsoft, Google, NVIDIA etc.

That is a huge amount of ongoing dependence, but not an unfamiliar picture for customers.

Just when we were seeing some small signs of a swing back to self-hosting as cloud compute costs continued to rise, the world of AI precludes that for many due to the sheer scale of compute needed. There are many such nested strategic challenges.

Chicanes and Chicanery vs Straight Paths to Productivity

The path from Promise to PITA and eventual BAU operation is less straight line and more peaks, troughs, pivots, and switchbacks (analogous and linked to those playing out in the AI product space). Each bringing its own set of shifting requirements.

When LinkedIn co-founder and Greylock partner Reid Hoffman first coined the term “blitzscaling,” he kept it simple: It’s a concept that encourages entrepreneurs to prioritize speed over efficiency during a period of uncertainty. Years later, founders are navigating a pandemic, perhaps the most uncertain period of their lives, and Hoffman has a clarification to make.

“Blitzscaling itself isn’t the goal,” Hoffman said during TechCrunch Disrupt 2021. “Blitzscaling is being inefficient; it’s spending capital inefficiently and hiring inefficiently; it’s being uncertain about your business model; and those are not good things.” Instead, he said, blitzscaling is a choice companies may have to make for a set period of time to outpace a competitor or react to a pandemic rather than a route to take from idea to IPO.

The question remains: How many of these market evolutions were on original vendor roadmaps and how many represent pivots in response to limitations? The rapid pace of development involves releasing first and refining later while courting and burning huge quantities of compute and capital, entirely in keeping with blitzscaling to market dominance. What does that tell us about promises after the pin on the GenAI grenade was pulled by OpenAI almost exactly 2 years ago?

To what extent are we being pulled by vendor-dictated change rather than collaborating via market signals to inform evolution? How much of the data in feedback loops from customer to vendor can or will roll into next iterative steps? To what extent does that matter, depending on large vendor strategies?

Costs and Benefits of Pausing for Thought

Perhaps time to retreat from worries about AImageddon (we have another few choruses of that due with the agentic AI marketing). There will likely be existential risk flares aplenty about semi-autonomous steps to buy things, respond to customer service calls, or raise tickets in systems.

In parallel we have macro resource challenges: data shortages, ongoing copyright challenges, and firms contracting experts to refine AI inputs and outputs. That is a shift from the initial Reinforcement Learning from Human Feedback (RLHF) that was often carried out (and still is for model retraining) by people in countries with far fewer employment opportunities and too often precarious terms and conditions.

This has all the signs of swans serenely swimming on the surface, attracting the lion's share of the breadcrumbs from adoring observers, but paddling like crazy to stay afloat. Not to mention that the bread shop may close, and bread is never good food for such birds: it bloats, breeds dependence, and devalues less harmful sustenance.

Instead of labouring that metaphor (or actively strangling it), I'll leave you with a last few things to consider.

Early entry is great, whether forced by a thoroughly wooed board or initial confirmed utility, but a well informed second follower often reaps longer-term rewards, or can at least avoid a chunk of sunk costs. That really is a PITA.

What do you practically need to avoid the shiny new AI solution getting shelved? Did you buy into GenAI as a staff replacement technology when no-one was looking, but advertise as work enhancement in public? Is that aligned with reality? Who can you trust to have that conversation? Doing that is vital.

Maybe more practical detail to tackle that FOMO another time.

Apologies for not being a bright ray of AI sunshine (there are arguably plenty performing that function). This was solely attempting to paint a picture of why so many tech governance and tech operations bodies are skeptical and weary.

Many, believe it or not, are excited about new capabilities where they are a credible option, but many others are sick to the back teeth of hype that makes their jobs harder (apologies to any tech or GRC folk I have mischaracterised).